Hello there. I wrote this post quite a while ago and kept it as a draft for about four months.

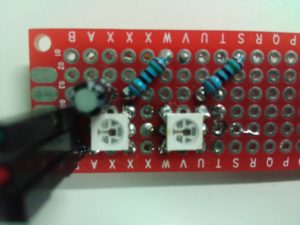

So, as discussed in the previous post, I own a FadeCandy controller, and I've ordered some WS2812B led strips from eBay. Cheap leds from China: US$ 15 for 5 meters of led strip with 30 leds/meter. Stuff ordered from China takes about a month to arrive, but it's dirt cheap. And with the free shipping they offer, I often wonder how they even cover the postage costs. Is mail free in China?

Anyways, I stuck the led strips to the shelves above my TV and hooked them up to the FadeCandy controller. Each strip is 2 meters long (60 leds), and I have two of them. The FadeCandy controller can drive up to 8 strips with a maximum of 64 leds per strip. So I intend to hook up both strips to the controller, but to begin with, I've connected only one. That is mainly due to power limitations, as for now I am powering it through a powered USB hub: a Sitecom 4-port USB hub, which comes with a 1 A power supply. (According to the USB specs, it should be 4 x 0.5 = 2 A.)
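To put the power limitation in numbers, here is a small sketch. It assumes the commonly quoted rule of thumb of about 20 mA per colour channel at full value (so about 60 mA per WS2812B pixel at full white) and that current scales linearly with the channel value. Real strips deviate from this, and the controller's own consumption is ignored. The function names are my own, for illustration only.

```c
#include <assert.h>

/* Rule-of-thumb assumption: ~20 mA per colour channel at full value (255),
 * i.e. ~60 mA per pixel at full white. Current assumed linear in value. */
#define MA_PER_CHANNEL 20

/* Estimated current (mA) for n_leds pixels all showing the same r, g, b. */
static long strip_current_ma(long n_leds, int r, int g, int b)
{
    assert(r >= 0 && r <= 255 && g >= 0 && g <= 255 && b >= 0 && b <= 255);
    return n_leds * (r + g + b) * MA_PER_CHANNEL / 255;
}

/* Highest uniform white level (0..255) whose estimated current fits
 * within budget_ma. */
static int max_white_level(long n_leds, long budget_ma)
{
    assert(n_leds > 0);
    long level = budget_ma * 255 / (n_leds * 3 * MA_PER_CHANNEL);
    return level > 255 ? 255 : (int)level;
}
```

Under this rule of thumb, one 2 m strip at full white, `strip_current_ma(60, 255, 255, 255)`, comes out at 3600 mA, more than three times what the hub's 1 A supply delivers, and `max_white_level(60, 1000)` returns 70, so uniform white would have to stay below roughly a quarter of full scale.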

I started the FadeCandy server with the default configuration and ran one of the examples. As the examples are configured for a 2D array of leds, the effects they produce don't make sense on a single strip. However, just to see whether it works, that is not an issue.

Running the demo makes the leds light up with some effects, so everything seems to work fine. However, when I stopped the demo, I noticed some leds blinking. Looking at the FadeCandy page on Adafruit, I noticed it says “Dithering USB-Controlled Driver for RGB NeoPixels”. Temporal dithering, I presume, which explains what I am seeing. Fortunately, this can be disabled by adding the following to the config file:

```
"dither": false,
"interpolate": false,
```
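The blinking makes sense once you look at how temporal dithering works in principle: to show a level that falls between two 8-bit steps, the output alternates between the neighbouring steps over successive frames, so that the average comes out right. At the very bottom of the range that means switching between 0 and 1, which is visible as flicker. Below is a minimal error-accumulation sketch of the idea. It is not FadeCandy's actual firmware algorithm; the 8.8 fixed-point input and the names are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Carries the fractional remainder from one frame to the next. */
typedef struct {
    uint16_t err; /* low 8 bits of the accumulator, 0..255 */
} dither_state;

/* target is an 8.8 fixed-point brightness (level * 256). Returns the
 * 8-bit level to show in this frame; averaged over many frames, the
 * returned levels approach target / 256. */
static uint8_t dither_step(dither_state *s, uint16_t target)
{
    assert(s != NULL);
    if (target > 255u * 256u)           /* clamp so the result fits in 8 bits */
        target = 255u * 256u;
    uint32_t acc = (uint32_t)target + s->err;
    s->err = (uint16_t)(acc & 0xFFu);   /* keep the fraction for next frame */
    return (uint8_t)(acc >> 8);         /* integer part is this frame's level */
}
```

With `target = 128` (a level of 0.5) and a zero-initialised state, successive calls return 0, 1, 0, 1, and so on: exactly the on/off blinking at the low end.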

So after this initial test, I proceeded to extend the test code I've shown in my first post. I would like to create a running rainbow effect: constant brightness, but changing colours. Googling for this constant-brightness problem, I stumbled across an algorithm that converts from the HSV to the RGB colour space. Running this code gives me a nice fading rainbow: the hue changes while saturation and brightness stay constant.

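```c
int index = 0;  // current hue, 0..767

while (true) {
    // Shift every pixel one position along the strip (60 leds: 0..59),
    // making room for a new colour at position 0.
    for (int i = 59; i > 0; i--) {
        data.leds[i] = data.leds[i - 1];
    }

    // Advance the hue by 8 steps and wrap it at 768.
    index = (index + 8) % 768;

    // hue, saturation, brightness -> RGB, written to the first pixel
    hsb2rgbAN2(index, 255, 255, data.leds);

    // Send the frame over the socket
    send(Socket, &data, sizeof(data), 0);

    usleep(50000);  // 50 ms per step
}
```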
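I don't reproduce `hsb2rgbAN2` itself here. As an illustration of the same idea, this is a minimal sketch of a 768-step hue wheel (three segments of 256 steps, matching the `% 768` in the loop), where two channels cross-fade so that r + g + b stays at 255 before saturation and brightness are applied. `hsb_to_rgb` and `rgb_t` are hypothetical names, not the routine I actually used.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t r, g, b;
} rgb_t;

/* Hypothetical stand-in for hsb2rgbAN2.
 * hue: wrapped into 0..767, sat and bri: 0..255. */
static void hsb_to_rgb(int hue, uint8_t sat, uint8_t bri, rgb_t *out)
{
    assert(out != NULL);
    hue %= 768;
    if (hue < 0)
        hue += 768;

    int up = hue % 256;      /* channel fading in within this segment */
    int down = 255 - up;     /* channel fading out */
    int c[3];                /* r, g, b before sat/bri; sums to 255 */

    switch (hue / 256) {
    case 0:  c[0] = down; c[1] = up;   c[2] = 0;    break; /* red -> green */
    case 1:  c[0] = 0;    c[1] = down; c[2] = up;   break; /* green -> blue */
    default: c[0] = up;   c[1] = 0;    c[2] = down; break; /* blue -> red */
    }

    uint8_t res[3];
    for (int i = 0; i < 3; i++) {
        /* Desaturate towards an even grey (3 * 85 = 255), which keeps the
         * channel sum close to 255. */
        int v = (c[i] * sat + 85 * (255 - sat)) / 255;
        res[i] = (uint8_t)(v * bri / 255);  /* scale by brightness */
    }
    out->r = res[0];
    out->g = res[1];
    out->b = res[2];
}
```

Everything is integer arithmetic, which keeps it cheap enough for a microcontroller as well.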

However, it's rather bright, so I turned the brightness parameter down a little. That did not give the desired result, though. The nice fading effect is gone; it looks like separate colours running along the strip. With lower values, it even goes down to just red, green and blue parts, with dark in between. Looking at the FadeCandy product page again, it says “Firmware that uses unique dithering and color correction algorithms to raise the bar for quality while getting out of the way of your creativity.” Colour correction... that's the problem, I suppose. I based my configuration on the default configuration, which included:

```
"color": {
    "gamma": 2.5,
    "whitepoint": [1.0, 1.0, 1.0]
},
```

Removing that from my configuration fixes the problem and gives me a properly fading rainbow, even at low brightness.
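My guess at why the rainbow broke up, as a simplified model: with gamma 2.5, the output is 255 * (input / 255)^2.5, so low inputs are pushed very close to zero. If that result ends up rounded to 8 bits without dithering (which I had just disabled), every input from 0 to 21 comes out as 0, and at low brightness the weaker channel of a mixed colour simply disappears. FadeCandy itself works at higher internal precision, so this is not its actual pipeline. The table-building function is my own illustration; it uses a small Newton's-method square root so it needs no libm.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Square root by Newton's method for v >= 0 (avoids linking libm). */
static double newton_sqrt(double v)
{
    if (v <= 0.0)
        return 0.0;
    double r = v > 1.0 ? v : 1.0;  /* start at or above the root */
    for (int i = 0; i < 60; i++)
        r = 0.5 * (r + v / r);
    return r;
}

/* Fill table[i] with round(255 * (i / 255)^2.5): a plain 8-bit gamma curve
 * with no dithering, so fractional results are simply rounded. */
static void build_gamma25_table(uint8_t table[256])
{
    assert(table != NULL);
    for (int i = 0; i < 256; i++) {
        double f = i / 255.0;
        double g = f * f * newton_sqrt(f);  /* f^2.5 = f^2 * sqrt(f) */
        table[i] = (uint8_t)(g * 255.0 + 0.5);
    }
}
```

In this model an input of 64 (a quarter of full scale) comes out as 8, and a dim orange such as (40, 20, 0) becomes (2, 0, 0): pure dim red, which matches the separate colour bands I was seeing.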

When reading up on the WS2812 leds, I discovered there is a clone, the SK6812, which is better than the original: it uses a higher PWM frequency than the WS2812. (The 800 kHz figure often quoted for both chips is the data rate of their serial protocol, not the PWM frequency.) So I decided to look for the SK6812, and then I found out there is a variant that, besides the red, green and blue leds, also contains a white led. The RGBW variants are not supported by the FadeCandy controller.

That's when I decided to roll my own implementation. So I started looking around for libraries that can control these leds. Among the libraries I found was FastLED, which supports a wide range of LEDs. At the top of their list of supported chipsets, they list the APA102 and recommend it. The APA102 also has a clone, the SK9822. Where the APA102 has a PWM frequency of 19.2 kHz, the SK9822 only has 4.7 kHz. However, these leds also support dimming. When dimming, the APA102 puts another PWM signal over the 19.2 kHz signal, at a much lower rate: 440 Hz. The SK9822, on the other hand, uses a current source to apply the dimming.
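The dimming shows up in the APA102 data format. As far as I know, each LED gets a 32-bit frame: three 1 bits followed by a 5-bit global brightness value (the value the APA102 applies with its slow PWM and the SK9822 with its current source), then blue, green and red bytes. The LED frames are preceded by a 32-bit start frame of zeros and followed by an end frame that supplies extra clock pulses, at least one per two LEDs. The sketch below packs such a buffer. Using zero bytes for the end frame is an assumption (end-frame handling differs between sources and between the APA102 and SK9822), the function names are my own, and the datasheet of the actual strip should take precedence.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t r, g, b;
    uint8_t brightness; /* 5-bit global brightness, 0..31 */
} apa102_pixel;

/* Bytes needed for n pixels: start frame + 4 per LED + end frame. */
static size_t apa102_buffer_size(size_t n)
{
    return 4 + 4 * n + (n + 15) / 16;
}

/* Pack n pixels into buf (at least apa102_buffer_size(n) bytes).
 * Returns the number of bytes written. */
static size_t apa102_encode(const apa102_pixel *px, size_t n, uint8_t *buf)
{
    assert(buf != NULL && (px != NULL || n == 0));
    size_t k = 0;

    for (int i = 0; i < 4; i++)                 /* start frame: 32 zero bits */
        buf[k++] = 0x00;

    for (size_t i = 0; i < n; i++) {
        buf[k++] = (uint8_t)(0xE0 | (px[i].brightness & 0x1F)); /* 111 + 5-bit brightness */
        buf[k++] = px[i].b;                     /* colour order: blue, green, red */
        buf[k++] = px[i].g;
        buf[k++] = px[i].r;
    }

    /* End frame: at least n/2 extra clock edges; zero bytes assumed here. */
    for (size_t i = 0; i < (n + 15) / 16; i++)
        buf[k++] = 0x00;

    return k;
}
```

Because the APA102 has a separate clock line, such a buffer can be shifted out over an ordinary SPI interface.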

I’ll save further details for the next post, as this post has been a draft for way too long now, and I am getting into details… and I am about to explain some more details…

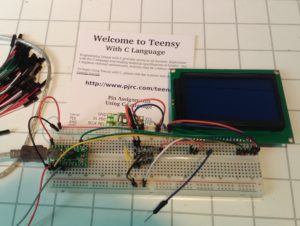

WS2812: RGB leds that can be daisy-chained and are used in, for example, LED strips. The FadeCandy is a controller that connects to a computer over USB and drives up to 8 chains of WS2812 leds.

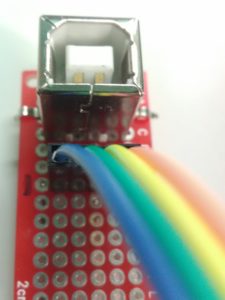

What is customised about this board is that it has a pin header instead of a mini/micro USB connector. Because of that, you can't simply plug in a cable, so I made a simple breakout board to connect a USB cable. I had some female USB B PCB connectors lying around, so I used one of those to connect the FadeCandy to USB.
